A Practical Guide to Semantic Mapping in Healthcare Data

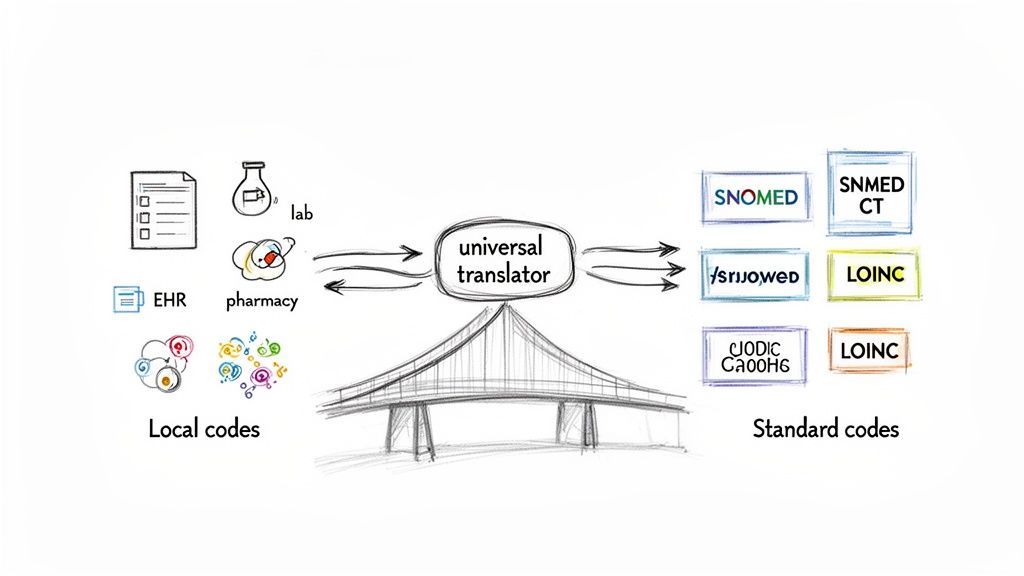

At its heart, semantic mapping is about creating a meaningful link between different data models. It's more than just connecting columns in a spreadsheet; it’s about translating the intent behind the data. This ensures that "Hypertension" in one system is understood as the exact same concept as "High Blood Pressure" in another.

This translation is the bedrock of any intelligent, unified data ecosystem.

Decoding the Language of Healthcare Data

Think of a hospital where the electronic health record (EHR), the pharmacy system, and the lab all speak different dialects. Each one uses its own local codes and terms to describe the very same clinical event. This is a digital Tower of Babel, creating fragmented data silos that get in the way of real progress. Semantic mapping serves as the universal translator in this chaotic environment.

It’s the critical bridge connecting all these disparate data sources by aligning their underlying meaning. Without it, data stays locked in its original system, its true potential unrealized. It's no surprise that a recent Gartner survey found 44% of AI-ready organizations see semantic alignment as a crucial step in preparing their data for artificial intelligence.

Why Semantic Mapping Is Non-Negotiable

This translation isn't just a technical box to check; it’s a foundational requirement for modern healthcare analytics and operations. By establishing a common language, semantic mapping tackles several major problems head-on:

- It breaks down data silos. Information from different sources, like diagnosis codes from an EHR and a lab’s test results, can finally be woven together into a single, cohesive patient record.

- It enables advanced analytics. Researchers can now conduct large-scale studies across multiple institutions, confident they are comparing apples to apples. This is absolutely essential for clinical trials and population health initiatives.

- It powers reliable AI. Machine learning models are only as good as the data they're trained on. Semantic mapping ensures the data fed into these models is consistent, clean, and meaningful, leading to far more accurate predictions.

At its core, semantic mapping transforms a collection of raw, disconnected data points into a coherent narrative. It's the process that lets us see the complete patient journey, connecting diagnoses, medications, procedures, and outcomes into one understandable story.

A Practical Example in Healthcare

Let's look at a common scenario. A local hospital uses an internal code, "HBP-01," to track a hypertension diagnosis. A national research database, however, requires the standardized SNOMED CT code "59621000" for "Essential hypertension." Semantic mapping is what creates that explicit link: HBP-01 means the same thing as 59621000.

When you apply this seemingly simple connection across millions of records, you achieve true interoperability. It allows different systems to exchange information without any ambiguity, paving the way for more effective research, better clinical decisions, and smarter healthcare technologies. For a deeper dive into how this kind of conceptual understanding is applied in search technology, you might find it useful to learn about semantic vector search for your website.

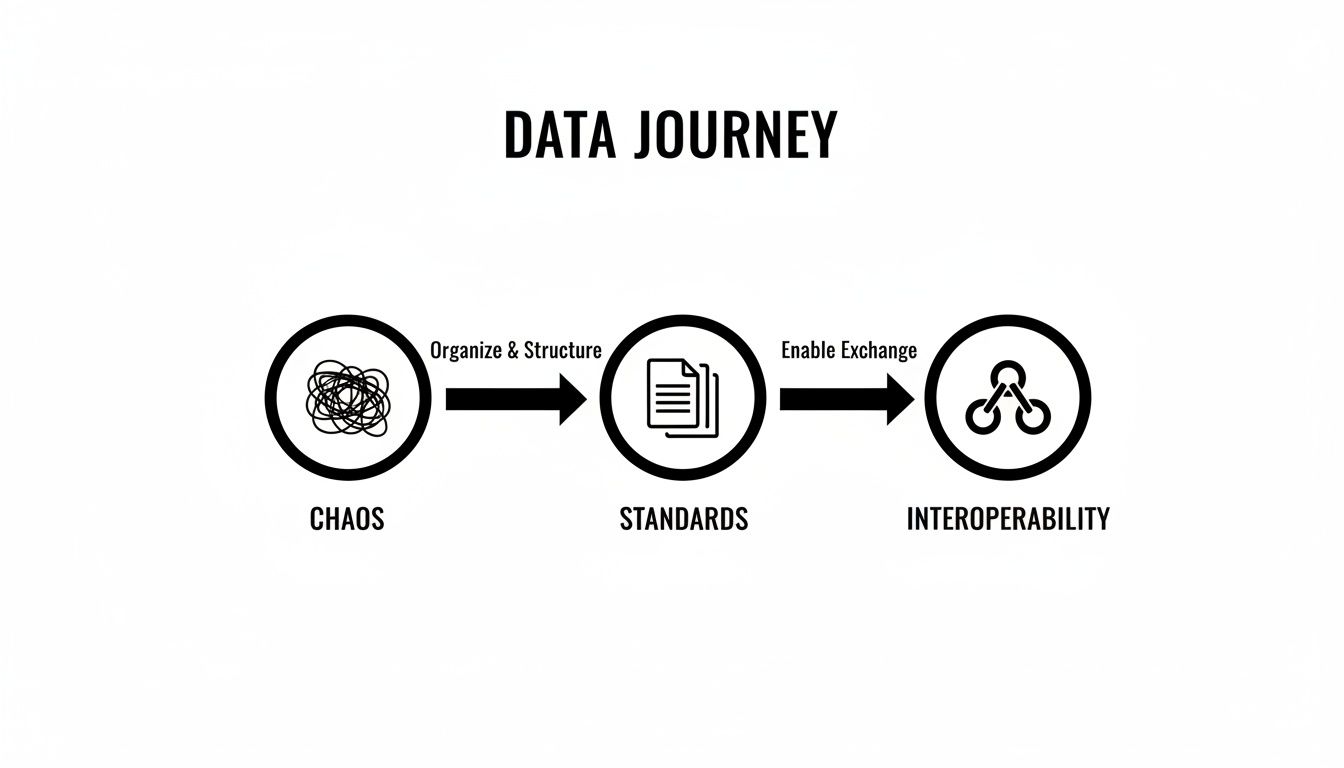

The Journey from Data Chaos to Interoperability

The big push for electronic health records (EHRs) in the early 2010s was meant to be a solution. Instead, it created a whole new kind of problem. We suddenly had a tidal wave of digital health information, but it was all trapped in separate, incompatible systems, each speaking its own language.

This digital boom laid bare a fundamental flaw in the healthcare ecosystem: we had no common vocabulary. Hospitals and research institutions were sitting on mountains of data but struggled to find any real insight because they couldn't connect the dots. The promise of a single, unified view of a patient's journey felt more distant than ever.

The Challenge of Outdated Codes

A big part of the problem was the coding systems themselves. Older standards like ICD-9 were fine for billing but completely out of their depth for sophisticated clinical research, especially across borders. Different countries used their own tweaked versions, and the codes just didn't have the clinical detail—the granularity—needed for any kind of deep analysis.

This created massive data mismatches. In early multinational trials, it wasn't uncommon to see 30-40% data mismatch rates simply due to conflicting coding systems and local EHR vocabularies. For researchers, this was more than just an annoyance; it was a fundamental barrier to discovery. Pooling data to draw reliable, global conclusions was next to impossible.

The inability to harmonize data meant that a patient's story was fragmented across different systems. A diagnosis in one hospital couldn't be accurately compared to a similar one in another, hindering the large-scale research needed to fight complex diseases.

The Rise of Modern Standards

All this chaos eventually sparked a movement toward standardization. Visionary groups like the Observational Health Data Sciences and Informatics (OHDSI) collaboration came together to champion a new way forward. They understood a simple truth: for data to be truly useful, it needs a common structure and a common language.

This realization led to the adoption of more modern, robust terminologies capable of capturing the true richness of clinical information:

- SNOMED CT: A comprehensive, scientifically-backed collection of clinical terms covering everything from diseases to findings and procedures.

- LOINC: The go-to standard for identifying medical laboratory observations.

- RxNorm: A normalized naming system for both generic and branded drugs, cutting through the confusion of different drug names.

These vocabularies gave us the building blocks for a shared understanding. The next piece of the puzzle was figuring out a blueprint for organizing the data itself.

A New Framework for Data Harmony

The OMOP Common Data Model (CDM) became that blueprint. Developed by the OHDSI community, the OMOP CDM provided a standardized structure—a common format—that could transform wildly diverse datasets into a single, cohesive form. The entire framework was built on the principle of semantic mapping.

The idea was to stop creating a messy web of point-to-point connections and instead map every local data source to one common target model. As these standards matured, semantic mapping became the cornerstone of interoperability. In fact, a systematic review found that over 50% of research on FHIR semantic interoperability was focused on mapping clinical terminologies to SNOMED CT and LOINC.

This shift has been powerful. Modern semantic mapping tools have since cut down mapping errors by 25% in OMOP-based studies, unlocking analytics on a staggering 1.2 billion patient records worldwide.

It was a total game-changer. This approach finally paved the way for the large-scale, federated analytics that are possible today, transforming isolated data points into a powerful, coordinated ecosystem ready for discovery. To learn more, check out our guide on essential healthcare interoperability solutions.

Understanding Core Semantic Mapping Methods

To really get what semantic mapping is all about, you have to look under the hood at the different engines that make it work. Each method offers a unique way to connect the dots between data points, and each comes with its own set of pros and cons. Picking the right one isn't a one-size-fits-all decision; it comes down to the specifics of your project, how messy your data is, and the resources you have on hand.

This journey from tangled, chaotic data to a standardized, interoperable asset is precisely what semantic mapping is designed to accomplish.

Getting this right is the bedrock of any reliable data foundation, making everything from large-scale analytics to trustworthy AI possible. Let's break down the three fundamental approaches people use to get there.

Rule-Based Mapping: The Custom Dictionary

The most direct approach is rule-based mapping. Think of it as building a custom phrasebook from scratch. You sit down and manually write out explicit instructions that connect one code to another. A classic example would be a rule like IF source_code = 'HBP-01' THEN target_code = '59621000'.

This gives you absolute control and pinpoint accuracy, which is great for datasets that are well-understood and don't change much. The downside? It's incredibly labor-intensive and fragile. The moment a new source code appears or an old one gets updated, you have to go back in and manually fix your rules.

The bottom line: Rule-based mapping is precise but doesn't scale. It shines in smaller, stable projects where every single connection needs to be defined and signed off on by a human expert.

Ontology-Driven Mapping: The Family Tree

Next up is ontology-driven mapping, which takes a much smarter route. Instead of one-to-one rules, this method taps into the built-in structure of established vocabularies like SNOMED CT. The key is to stop thinking of these vocabularies as flat lists and see them for what they are: intricate family trees where every concept has defined relationships, like "is a type of" or "is a part of."

This approach uses those hierarchical connections to find logical mappings. For instance, if you have a local code for "acute viral pneumonia," an ontology-driven system can figure out its relationship to the broader SNOMED CT concept of "Viral pneumonia" simply by walking up the branches of the vocabulary's tree. It's far more dynamic and less likely to break than a simple rule-based system.

OMOPHub Tip: For those who want to get hands-on, you can programmatically explore these relationships using tools like the OMOPHub Python SDK or the OMOPHub R SDK. For more detailed guidance, the official API documentation is your best friend.

Machine Learning and NLP: The Smart Assistant

The most sophisticated methods bring in Machine Learning (ML) and Natural Language Processing (NLP). These systems work like a smart assistant, learning from massive amounts of existing data to predict the most likely mappings. By analyzing patterns in text descriptions, code frequencies, and previously validated maps, they can suggest connections with impressive accuracy.

For example, an ML model could read the text "patient presents with severe high blood pressure" and confidently suggest the SNOMED CT code for "Essential hypertension" because it recognizes the semantic similarity. These models are fantastic for tackling ambiguity and can scale to enormous datasets, drastically cutting down on manual work. While the concept is often applied to text, its principles are also visible in other fields, like semantic image segmentation.

But they aren't magic. Even the best models need a "human-in-the-loop" to validate their suggestions, because in healthcare, clinical context is everything.

A Comparison of Semantic Mapping Methods

Choosing your strategy means weighing the trade-offs between precision, scale, and the sheer amount of human effort involved. This table breaks down how the three core methods stack up against each other.

| Method | Core Principle | Pros | Cons | Best For |

|---|---|---|---|---|

| Rule-Based | Manual "if-then" logic | High precision, full control | Brittle, high manual effort, poor scalability | Small, static datasets with strict validation needs |

| Ontology-Driven | Using vocabulary hierarchies | More scalable, leverages existing knowledge | Requires well-structured ontologies | Mapping within standardized terminologies like SNOMED |

| ML/NLP-Based | Learning from data patterns | Highly scalable, handles ambiguity, reduces manual work | Needs training data, requires human validation | Large, complex, or ambiguous datasets |

In the real world, the best data pipelines often blend these approaches. A common workflow is to use ML to generate a list of candidate mappings, have experts use ontology-driven tools to review and approve them, and then save those final, validated connections as concrete rules for the production ETL process. It’s about using the right tool for the right part of the job.

How the OMOP CDM Solves Key Mapping Challenges

Let's be honest: semantic mapping in healthcare is a minefield. The biggest problem? The sheer number of different coding systems out there. A single hospital might be juggling dozens of local, proprietary codes for things like diagnoses and procedures. To make that data useful for large-scale research, every single one of those codes needs to be accurately translated into universal standards like SNOMED CT, LOINC, and RxNorm.

That translation work is rarely straightforward. Clinical terms are notoriously ambiguous—the same word can mean totally different things depending on the context. To make matters worse, the standard vocabularies we map to are always evolving to keep up with medical science. That means the perfect mapping you create today could be obsolete tomorrow, turning data maintenance into a never-ending, resource-draining headache.

The Power of a Single, Stable Target

This is exactly where the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM) comes in. It was built specifically to tackle these problems.

Instead of getting tangled in a chaotic web of point-to-point mappings between countless source systems, the OMOP CDM gives you a single, stable, and standardized destination. Think of it as the ultimate "Rosetta Stone" for health data.

Suddenly, the whole mapping process gets a lot simpler. You're no longer trying to connect System A to System B, System C, and so on. Your only job is to solve one problem, over and over: how to map each source system to the OMOP CDM.

The OMOP CDM transforms the complex "many-to-many" mapping problem into a much more manageable "many-to-one" challenge. This fundamental shift is what enables the harmonization of data from diverse global sources, unlocking the potential for federated research at an unprecedented scale.

This centralized approach doesn't just cut down the initial workload; it makes long-term maintenance far easier. When a source system gets an update, you only have to fix its one map to the OMOP CDM. Everything else remains untouched.

A Common Language for Clinical Concepts

The real genius of the OMOP CDM is its reliance on standardized vocabularies. It's more than just a structural blueprint; it enforces a common language for every piece of clinical information.

Every event—a diagnosis, a drug exposure, a lab result—gets mapped to a standard concept ID from a controlled vocabulary like SNOMED CT or RxNorm. This forces a level of semantic consistency right from the start.

It cuts through the ambiguity. A diagnosis of "Essential hypertension" is always represented by the same standard concept ID (59621000), no matter if the source data called it "high blood pressure," "HBP," or used a local ICD-10-CM code.

This deep integration of standard terminologies provides a powerful framework that solves several critical issues all at once:

- Manages Vocabulary Updates: The OHDSI community centrally manages the vocabularies and provides regular updates, which can be systematically applied across the entire data network.

- Enforces Consistency: By forcing all data to conform to a standard set of concepts, it ensures that queries and analytical models produce results you can actually trust and compare.

- Clarifies Ambiguity: It makes you document your decisions, forcing you to be explicit about how you map fuzzy local terms to precise standard concepts.

OMOPHub Tip: Keeping track of vocabulary versions is crucial. You can check which vocabularies and versions are available through the OMOPHub API by looking at the official OMOPHub vocabularies documentation. This is the best way to make sure your mappings use the correct and most current terminology sets—a non-negotiable step for building a reliable data pipeline. For a more detailed exploration of the model's architecture, you can read our comprehensive guide on the OMOP Common Data Model.

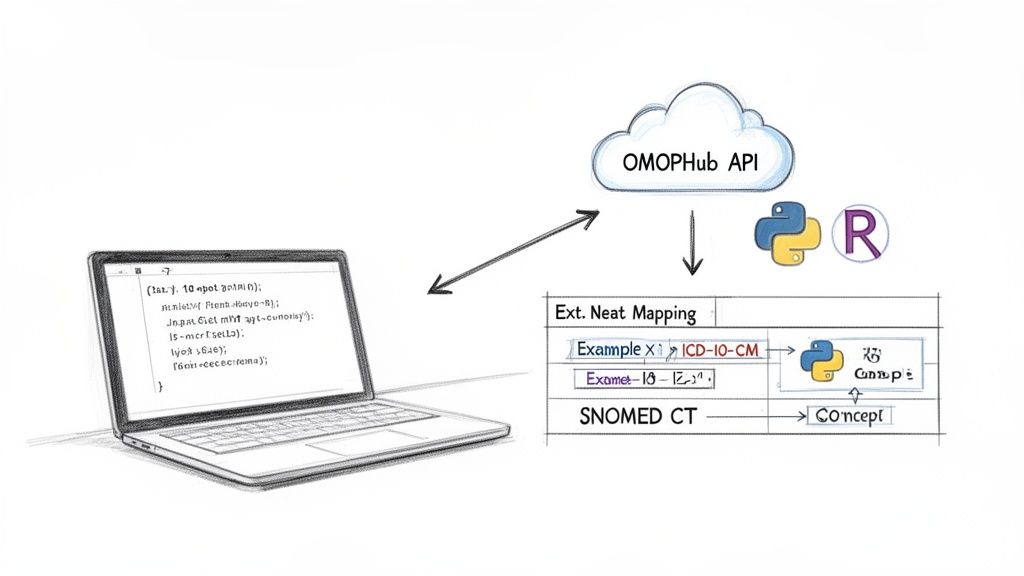

Practical Semantic Mapping with the OMOPHub SDK

It's one thing to understand semantic mapping in theory; it's another to actually make it work. For data engineers and clinical researchers, the biggest roadblock has always been the infrastructure. In the past, this meant downloading, hosting, and trying to maintain massive vocabulary databases—a tedious process that eats up resources and is a nightmare for version control.

This is where a developer-first platform completely changes the equation. Instead of wrestling with local databases, you get instant API access to the vocabularies you need. This approach eliminates the entire burden of vocabulary management. For teams working with OMOP, a platform like OMOPHub lets you automate mappings between SNOMED CT, ICD-10, and RxNorm without touching a single local file, cutting setup time from weeks to just a few minutes. You can see the real-world impact of this in a recent JMIR publication.

With an API-driven model, your team can plug powerful mapping capabilities directly into your existing data pipelines almost immediately.

Getting Started with the OMOPHub SDK

Putting this into practice is surprisingly simple. The OMOPHub SDKs, available for both Python and R, are built for quick integration. Once you install the SDK and set up your API key, you can start interacting with a huge library of standardized vocabularies programmatically.

A common first step is just looking up a concept. Let's say you're trying to find the standard OMOP concept ID for the ICD-10-CM code "I10," which stands for Essential Hypertension.

With the Python SDK, the code is about as clean as it gets:

import os

from omophub import OMOPHub

# Initialize the client with your API key

client = OMOPHub(api_key=os.environ.get("OMOPHUB_API_KEY"))

# Search for the concept by its code and vocabulary

response = client.concepts.search(

query="I10",

vocabulary_id=["ICD10CM"]

)

# Print the standard concept ID

print(response.items[0].concept_id)

# Expected Output: 320128

This simple query quickly finds the concept and gives you its standard ID (320128). That ID is the key to all your other mapping tasks.

Navigating Vocabulary Hierarchies

But real semantic mapping isn't just a simple lookup. It's about understanding the relationships between different concepts. A crucial task is mapping a very specific, granular source code to its more general, standard equivalent—this is how you achieve consistency in an ETL pipeline.

For example, you might have a local billing code that needs to be mapped to a standard SNOMED CT concept. The SDK lets you walk through these relationships in your code.

One of the most powerful features of the OMOP CDM is how it uses vocabulary hierarchies to build logical connections. By programmatically exploring these "is a" or "maps to" relationships, you can create automated mapping logic that is both durable and context-aware.

Think about mapping between different coding systems, like updating older codes to modern ones. If you're working with historical data, these relationships are absolutely essential. We dive deeper into this in our guide on ICD-10 to ICD-9 conversion.

Building Mapping Tables for Your ETL Pipeline

Armed with these tools, you can automate the creation of the mapping tables that fuel your ETL processes. Forget about manually updating a static spreadsheet. Now, you can write a script that hits the API and builds these tables on the fly.

This has some clear advantages:

- Accuracy: Your mappings are always based on the latest, centrally managed vocabularies, so you don't have to worry about using outdated or deprecated codes.

- Scalability: The process scales effortlessly. You can handle thousands or even millions of local source codes without adding more manual work.

- Reproducibility: Your mapping logic is captured in code. This makes the whole process transparent, easy to version, and simple to rerun or adjust later.

OMOPHub Tip: When you're ready to build, always check the official documentation for detailed examples. For instance, the get concept relationships endpoint documentation gives you all the parameters needed to build sophisticated queries. By embedding these API calls directly into your data pipelines, you're creating a sustainable, auditable workflow for all your semantic mapping.

Best Practices for High-Quality Semantic Maps

Creating a semantic map is one thing; keeping it accurate and trustworthy over time is a completely different challenge. It's not just about the initial setup. You need a deliberate strategy that marries technical precision with deep clinical oversight to build something that lasts.

Without this, even the most advanced automated mapping can quietly inject errors into your data pipelines. Before you know it, you're looking at flawed analyses and drawing the wrong conclusions.

The bedrock of any solid mapping project is good governance. This isn't just bureaucracy—it means having clear ownership and a validation workflow that puts clinical experts in the driver's seat. AI and machine learning tools are fantastic for suggesting potential matches, but they simply don't have the nuanced, real-world understanding to make the final call. A human-in-the-loop process isn't just a good idea; it's non-negotiable.

A semantic map is not a one-time project. Think of it as a living asset that needs active management. Treating it as a "set it and forget it" task is the fastest way to corrupt your data and torpedo your insights.

Establish Robust Governance and Validation

The single most important practice is to formalize your review process. Every single mapping, whether it came from an algorithm or a data analyst, needs to be reviewed and signed off by a subject matter expert. This step is what confirms the clinical accuracy and ensures the mapping logic actually fits what you're trying to achieve.

Good governance also demands obsessive documentation. For every mapping decision, you should be able to answer:

- The rationale: Why this target concept and not another? What was the thinking?

- The validator: Who signed off on this mapping, and on what date?

- The vocabulary versions: Exactly which versions of the source and target vocabularies were used at the time?

This paper trail becomes your audit log. It’s absolutely essential for troubleshooting problems down the line and understanding how your map has evolved.

Manage Vocabulary Versioning Proactively

Think about it: medical knowledge is always changing, and standard vocabularies like SNOMED CT and LOINC are updated constantly to keep up. These updates can make old concepts obsolete, shift hierarchies around, or add more specific terms. A map built on an old vocabulary version will degrade in quality surprisingly fast.

This is why proactive version management is so critical for long-term success. You must have a process for regularly checking and updating your maps whenever new vocabulary versions are released. This is where tools like the OMOPHub API can make a huge difference. They give you programmatic access to versioned vocabularies, which lets you build automated checks to flag mappings that a recent update might have broken. You can see the available vocabularies and their versions in the official OMOPHub documentation.

Avoid Common Pitfalls

Finally, a few common mistakes can completely derail mapping quality. One of the biggest is relying only on simple text or string matching. The word "cold" could mean a low temperature or the common cold—only human context can tell them apart.

Another classic pitfall is failing to document the "why" behind your mapping logic. This turns your map into a black box that's impossible for anyone else to maintain or validate later. By combining expert validation, proactive version management, and crystal-clear documentation, you can build a semantic mapping framework that truly delivers reliable, high-quality data for years to come.

Common Questions About Semantic Mapping

Even after you've got the methods and best practices down, some real-world questions always pop up during a semantic mapping project. Let's tackle a few of the most common ones to clear up any lingering confusion.

What Is the Difference Between Data Mapping and Semantic Mapping?

This is a big one. Think of it this way: data mapping is about the structure, while semantic mapping is all about the meaning.

Data mapping is the simpler first step. It’s like connecting the pipes—you link a source column like patient_dob to a target column called birth_date. You're just making sure the data gets from point A to point B.

Semantic mapping, on the other hand, cares about what’s inside the pipes. It translates the specific values, like turning a local diagnosis code 401.9_HYPERTEN into the globally understood SNOMED CT concept 59621000, which stands for "Essential Hypertension."

In short: Data mapping moves the box from one shelf to another. Semantic mapping reads the label on the box to make sure everyone understands what's inside.

How Do I Handle Vocabulary Updates?

Vocabularies are constantly changing, and this can feel like trying to hit a moving target. The only sane way to manage this is to version your maps right alongside the vocabularies they're built on. When a new version of SNOMED or LOINC comes out, you have to revisit your maps to see what's been deprecated, what's changed, and what's brand new.

This used to be a massive manual headache. Thankfully, modern tools are designed to handle this. For instance, a platform like OMOPHub gives you programmatic access to versioned vocabularies—something you can see in their official documentation. This means you can build scripts to automatically flag outdated concepts, making the update process controlled, repeatable, and far less likely to break your data pipelines down the line.

Can AI Completely Automate Semantic Mapping?

Not yet, and in a clinical setting, probably not ever. While AI and machine learning models are fantastic at accelerating the grunt work, they can't fully replace human clinical judgment.

AI is brilliant at generating a list of high-quality candidate mappings for a human to review. But the subtle context of clinical data—where a slight misinterpretation can have serious consequences—demands a "human-in-the-loop."

A clinical domain expert must always have the final say, validating the AI's suggestions to guarantee accuracy and patient safety. It’s best to think of AI as an incredibly efficient assistant that can take on 80% of the manual effort, freeing up your experts to do what they do best: apply their deep knowledge. The final sign-off is, and should be, a human responsibility.

Ready to stop wrestling with vocabulary databases and accelerate your data harmonization? OMOPHub provides instant API access to standardized medical vocabularies, letting you build robust, automated semantic mapping pipelines in minutes. Check out the platform at https://omophub.com.